See beyond the visible in endoscopy

through augmented perception, reasoning, modelling and action

Coordinator (person and lab): Sandrine Voros, TIMC-IMAG / CAMI group

CAMI Partners: LTSI laboratory (Rennes)

Started: October 2016 (1st recruitment)

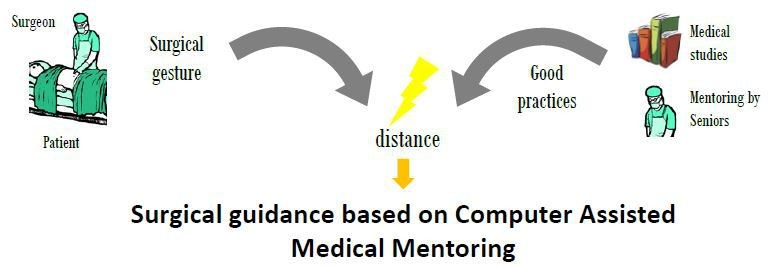

Motivations and objectives:

Surgical endoscopy is a minimally invasive surgical technique for surgeries on abdominal organs. This type of surgery offers numerous benefits for the patient (less bleeding, fewer transfusions, shorter intervention time), but it increases the complexity of the surgeon’s task, since the surgeon has no direct view of the operative site. To assist the surgical gesture, two types of robotic systems have been developed: robotic endoscope holders (such as Endocontrol’s ViKY® robot), and complete telesurgery systems (such as Intuitive Surgical’s da Vinci™ robot), which offer a comfort close to that of open surgery in a minimally invasive environment.

Today, only the da Vinci™ system is used on a large scale in hospitals (~5000 systems installed worldwide as of 2017). However, its clinical added value for the patient remains controversial relative to its cost [1].

CAMI systems, in contrast, make it possible to compute the distance between the performed surgical gesture and the surgical planning (e.g. to control the trajectory of a drilling tool), and thus offer the opportunity to actually quantify the Delivered Medical Service to patients. These distance measurements, and their link to clinical outcomes, are relatively easy to establish for bone surgery: in short, planning can be made on pre-operative images, and the tool trajectories are fairly simple. Surgical endoscopy is a more complex case. It concerns soft tissues, so pre-operative data, when available, rapidly becomes outdated; moreover, defining the “good clinical practice” is more complex than comparing the actual (often straight) trajectory of a drilling tool to its planned trajectory.
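For the simple bone-surgery case mentioned above, the distance between a performed gesture and a straight planned trajectory can be sketched as follows. This is only an illustrative sketch, not the method used in the project: the function names `point_to_line_distance` and `trajectory_deviation`, the choice of mean and maximum deviation as summary values, and the assumption that tool-tip positions and the plan are expressed in the same 3D reference frame are all hypothetical.

```python
import math


def point_to_line_distance(p, a, b):
    """Distance from 3D point p to the infinite line through a and b."""
    ab = [b[i] - a[i] for i in range(3)]
    ap = [p[i] - a[i] for i in range(3)]
    # |ap x ab| / |ab| is the perpendicular distance from p to the line.
    cross = [
        ap[1] * ab[2] - ap[2] * ab[1],
        ap[2] * ab[0] - ap[0] * ab[2],
        ap[0] * ab[1] - ap[1] * ab[0],
    ]
    norm_ab = math.sqrt(sum(c * c for c in ab))
    if norm_ab == 0.0:
        raise ValueError("entry and target points must differ")
    return math.sqrt(sum(c * c for c in cross)) / norm_ab


def trajectory_deviation(tip_positions, entry, target):
    """Mean and maximum deviation of recorded tool-tip positions
    from the planned straight line (entry -> target).

    All points are assumed to be in the same reference frame
    (hypothetical setup, e.g. after registration to the planning image).
    """
    if not tip_positions:
        raise ValueError("at least one tool-tip position is required")
    distances = [point_to_line_distance(p, entry, target) for p in tip_positions]
    return {"mean": sum(distances) / len(distances), "max": max(distances)}


# Hypothetical example: planned drilling axis along z, three recorded positions.
planned_entry = (0.0, 0.0, 0.0)
planned_target = (0.0, 0.0, 10.0)
recorded_tips = [(0.0, 0.0, 1.0), (1.0, 0.0, 5.0), (0.0, 3.0, 9.0)]
deviation = trajectory_deviation(recorded_tips, planned_entry, planned_target)
```

No such closed-form reference exists for soft-tissue endoscopy, which is why the remainder of this page focuses on capturing tool and anatomy information and on defining suitable metrics.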

Four needs must be fulfilled to provide a CAMI system for surgical endoscopy:

- Capture in real-time the position of the surgeon’s instruments and information about the targets of the surgical gesture (anatomical structures)
- Transform this information into metrics that characterize the surgical practice
- Establish quantified definitions of the “good surgical practice” that the surgeon wants to apply to treat his patient optimally
- Provide the surgeon with user interfaces allowing him to visualize the information that will help him minimize the distance between his surgical practice and the gold standard practice(s)

We (TIMC-IMAG) proposed solutions for those needs in the context of surgical endoscopy, within the Integrated Project “Augmented Endoscopy”.

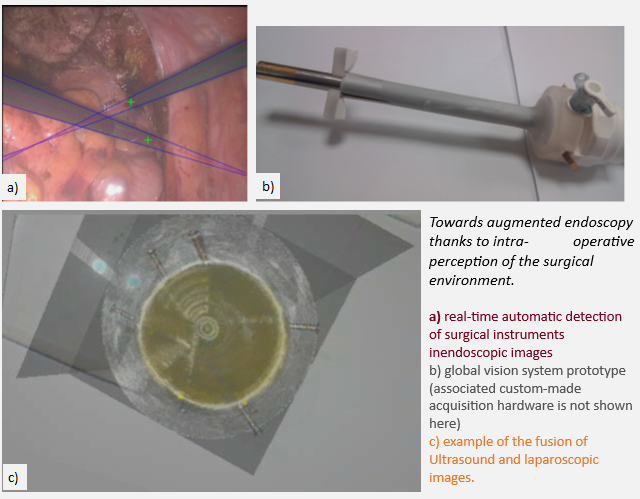

Regarding augmented perception and reasoning, we have proposed methods for image-based tool tracking, based on classical image processing approaches [CARE15, TTPatent] (winner of the 2015 MICCAI EndoVis Grand Challenge, tool tracking category), and on deep-learning-based approaches (winner of the 2021 MICCAI EndoVis Grand Challenge, semantic segmentation category).

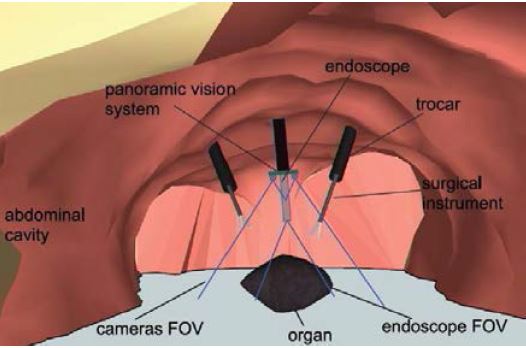

We have also developed a prototype of a “global vision system” (GVS), which adds mini-cameras to the classical endoscope in order to increase the laparoscopic field of view and help the surgeon better visualize the surgical scene. The potential benefit of this prototype for surgical practice was evaluated through testbench experiments [IRBM22] and animal experiments [SurgEnd21]. We also addressed human-machine interface issues by investigating the feasibility of mosaicking the views of the different cameras [JIm22].

We also worked on devices and methods to allow fusion of laparoscopic and ultrasound images during radical prostatectomy (one of the most challenging urologic interventions) [JUro16].

These devices and methods will make it possible to address needs 1) and 2): they provide a better perception of the environment, and a fused environment in which metrics can be extracted in a single reference frame to capture surgical gestures in their context.

Regarding need 3), we carried out more upstream work, in collaboration with the LTSI in Rennes, on the modelling of surgical procedures to better understand and define “good surgical practice” [JBI16, AIM16, IJCARS20, IJCARS21].

Our objective within this integrated project is to build on these existing building blocks in order to provide bimodal navigation systems (GVS or ultrasound + endoscopic images) that are ready for -clinical evaluations. Regarding the modelling of surgical procedures, our objective is to demonstrate that exploiting such augmented information, in addition to the workflow of surgical activities, can enhance the understanding of surgical expertise.

Transfer:

[TTPatent] Device and method for automatically detecting a surgical tool on an image provided by a medical imaging system, 2015 FR3039910B1, 2016 US10671872B2, EP3332354A1, WO2017021654A1.

Main publications:

[SurgEnd21] Trilling, B., Mancini, A., Fiard, G. et al. Improving vision for surgeons during laparoscopy: the Enhanced Laparoscopic Vision System (ELViS). Surg Endosc 35, 2403–2415 (2021). https://doi.org/10.1007/s00464-021-08369-2.

⟨inserm-03208307⟩

[IRBM22] B. Trilling, S. Vijayan, C. Goupil, E. Kedisseh, A. Letouzey, P.A. Barraud, J.L. Faucheron, G. Fiard, S. Voros, Enhanced Laparoscopic Vision Improves Detection of Intraoperative Adverse Events During Laparoscopy, IRBM, Volume 43, Issue 2, 2022, 93-99. https://doi.org/10.1016/j.irbm.2020.12.001.

⟨hal-03054368⟩

[JIm22] Guy, S.; Haberbusch, J.-L.; Promayon, E.; Mancini, S.; Voros, S. Qualitative Comparison of Image Stitching Algorithms for Multi-Camera Systems in Laparoscopy. J. Imaging 2022, 8, 52. https://doi.org/10.3390/jimaging8030052.

⟨hal-03586852⟩

[JUro16] Cécilia Lanchon, Guillaume Custillon, Alexandre Moreau-Gaudry, Jean-Luc Descotes, Jean-Alexandre Long, et al. Augmented Reality Using Transurethral Ultrasound for Laparoscopic Radical Prostatectomy: Preclinical Evaluation. Journal of Urology, 2016, 196 (1), pp.244-50. ⟨10.1016/j.juro.2016.01.094⟩.

⟨hal-01424500⟩

[JBI16] Arnaud Huaulmé, Sandrine Voros, Laurent Riffaud, Germain Forestier, Alexandre Moreau-Gaudry, et al. Distinguishing surgical behavior by sequential pattern discovery. Journal of Biomedical Informatics, 2017, 67, pp.34-41. ⟨10.1016/j.jbi.2017.02.001⟩.

⟨hal-01470402⟩

[AIM16] Arnaud Huaulmé, Pierre Jannin, Fabian Reche, Jean-Luc Faucheron, Alexandre Moreau-Gaudry, et al. Offline identification of surgical deviations in laparoscopic rectopexy. Artificial Intelligence in Medicine, 2020, 104, pp.101837. ⟨10.1016/j.artmed.2020.101837⟩.

⟨hal-02510803⟩

[IJCARS20] Arthur Derathé, Fabian Reche, Alexandre Moreau-Gaudry, Pierre Jannin, Bernard Gibaud, et al. Predicting the quality of surgical exposure using spatial and procedural features from laparoscopic videos. International Journal of Computer Assisted Radiology and Surgery, 2020, 15 (1), pp.59-67. ⟨10.1007/s11548-019-02072-3⟩.

⟨hal-02353077⟩

[IJCARS21] Arthur Derathé, Fabian Reche, Pierre Jannin, Alexandre Moreau-Gaudry, Bernard Gibaud, et al. Explaining a model predicting quality of surgical practice: a first presentation to and review by clinical experts. International Journal of Computer Assisted Radiology and Surgery, 2021, 16 (11), pp.2009-2019. ⟨10.1007/s11548-021-02422-0⟩.

⟨hal-03264830⟩

[1] Yaxley, John W et al. Robot-assisted laparoscopic prostatectomy versus open radical retropubic prostatectomy: early outcomes from a randomised controlled phase 3 study. The Lancet, Volume 388, Issue 10049, 1057-1066.
